Introduction

Adam: Welcome to CoRecursive, where we bring you discussions with thought leaders in the world of software development. I’m Adam, your host.

Philip: I’m going to stick with Russell here, and so our dedication should be to truth rather than to efforts to be widely known.

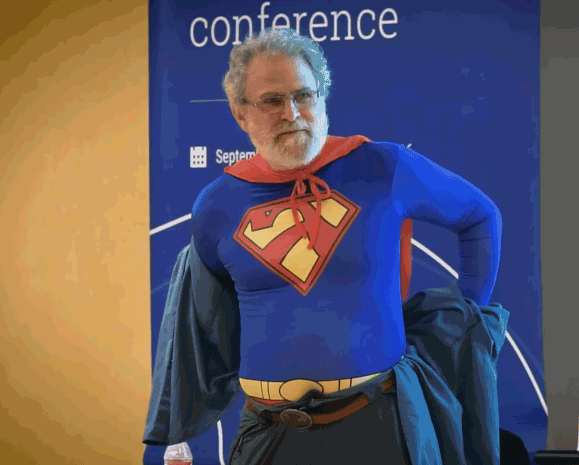

Adam: Hey, Phillip Wadler is a very accomplished computer scientist, a great communicator, and also occasionally dresses up as his alter ego Lambda Man. He has a paper and a talk where he gets close to saying something like functional programming languages are superior to other languages. Today’s interview, along the way to arguing this point, we explore computer science history, lambda calculus, alternate universes, space aliens, and what programming language a theoretical creator of the universe would use.

Phil is a super interesting guest and the bits of computer science history we touch on I find really fascinating. Before we start the interview, if you are enjoying the podcast, please rate or review us on iTunes, it really helps.

So I have this paper of yours here, I’m going to shake it so maybe they can hear, Propositions as Types. And there’s also this great presentation you’ve done a couple of times about it. So I wanted to talk to you a bit about it. I found it to be very interesting. I highlighted one section here in the introduction that says, “Those of us that design and use programming languages may feel that they’re arbitrary, but Propositions as Types assures us that some aspects of programming are absolute.” It’s kind of a big statement, I would say.

Programming Languages Are Absolute

Philip: Yeah. Yeah, it is sort of a big thing and I know many people disagree with me, but for me this is highly motivating. When I first understood about Propositions as Types, well actually the first time I saw it, I just thought, oh, that’s just a funny coincidence. That’s cute, but of no significance. But after a while, it became clear that it wasn’t just between a particular system of computation and a particular system of logic, but rather pretty much any system of logic you could name had a corresponding system of computation and vice versa. And even that sometimes looking at interesting logics could give you interesting systems of computation. So it ended up becoming a theme of my research career, probably the most important theme in my research career.

And yes, I feel very strongly about it, that this is telling us something quite useful and quite important. And it’s very often, computer science is a horrible name, right? It’s horrible for two reasons. It’s horrible because it has the word computer in it. That’s just pointed out they’re calling it computer science is a bit like calling astronomy, telescope science, right? It’s not the telescopes that are interesting, it’s what you can see through them. And then the other thing that’s wrong with the name is that we have science in it. If you’re a real science, like physics or astronomy, you don’t feel a need to put science in your name. It’s only if you have an inferiority complex and feel that people won’t take you seriously as a science that you need to put science in your name.

Computer Science is a Horrible Name

So I much prefer names like computing or informatics, which is the fancy name we use for it at Edinburgh. And it’s relatively rare that you see things that make you think, oh, this is getting a deep insights of the kind that you get in physics or mathematics. But this, even though it’s really very shallow, it’s almost just a pun, it feels to me like it’s getting at something deep and important.

Adam: So let’s back up and let’s try to get to that place. So, what is computability theory?

What Is Computation?

Philip: What is computability theory? So that’s the one other claim I think, or was certainly the first claim that computing made to say there’s really something interesting here with content. And the beginning of that was the observation that you could build a universal computer, that there was a way of, well, actually, no, it goes back further than that, that you could come up with a notion of computation, a notion that would capture anything that a computer could do. That was the big thing. And it’s quite interesting because three different groups did it. There were three different independent definitions that came along. One from Church, one from Godel, and one from Turing. So Godel and Church knew a little bit about each other, Turing was completely separate, it was something he did basically as an undergraduate student, one of the most cited papers ever was done by Turing as an undergraduate student. The fact that three different people found the same thing independently, really gets you a lot of evidence that you’re onto something.

Lambda Calculus

Adam: And so the thing they found is I guess, the Turing machine is probably the term I’m most familiar with.

Philip: Right, so there are three different terms. Church’s term is lambda calculus, which you might’ve heard of. Godel’s term is recursive functions. And what Turing came up with is what we now call Turing machines. And the point was that all three of those had exactly the same computational tower. And then Church’s thesis says, “This is what you can do with a computer.” And Church should’ve just boldly asserted it. And many people looked at lambda calculus and they went, “Wait, no, why should this correspond to what a computer can do?” And of course this was back in the 1930s, this was before they had digital computers. They did have computers. There were things around called computers. If you’ve seen the movie, Hidden Figures, about early work at NASA, they had a room full of computers. These computers were women that sat around doing computations.

Women Were the First Computers

And one of the interesting things is that a lot of this work, which is actually fairly sophisticated work, for some reason they decided that, “Oh, we’ll just let the women do it.” And so when the first computers came along, they said, “Oh, well, the women have been acting as computers, we’ll let them program it.” And there was an interesting stage where people suddenly went, “Oh, actually programming computers is really interesting. This isn’t women’s work, this is men’s work.” And there was this whole big shift to push women out. And now of course we’re very keen to bring women back in and to say, “No, we need a diverse range of views here. We don’t want just a bunch of geeky men doing this, we want a diverse range of people doing it and trying to restore the balance again that we, it happened balanced the other way at first and then very much unbalanced, and-”

Adam: So it only took us 70 years.

Philip: It was quite a waste that the way I first heard about it is when I was in high school. One of my friend’s mothers had been one of the early computer programmers, and so it was first from her that I heard about this history and it was her who said, “Oh, well, when the men figured out it was interesting, they kicked us out.” And eventually I found other historical documents describing that. And this is really something that we need to fix now.

Adam: Definitely. I definitely agree with that.

Philip: So, anyhow, computer didn’t use to mean a machine, it meant a person, as it turned out a woman, sitting down doing computations, following a fixed set of rules. And then the question was, well, what do mean by what you can do following a fixed set of rules? The fancy name for that being algorithm. And there were three different definitions that came out, as I said Church’s, which is lambda calculus. And people just looked at that and said, “Why should that correspond to what people could do?” And then Godel’s, where, in fact, what happened was Godel went to Church and he said, “I don’t believe your definition.” And Godel said, “Fine. If you think my definition is wrong, you come up with your own definition.” Probably not quite as testily as I put it. Maybe it was even more testy, I don’t know.

And so Godel then came up with recursive functions and the paper on it was actually notes written by Church’s student, Kleene, who also became famous in logic and computing. So Kleene took notes as Godel presented this at Princeton where Church was, at the Institute for Advanced Study. Oh, sorry, no, this was before there was the Institute for Advanced Study. This was before the war. The Institute for advanced study was founded during the war at Princeton because there were all these German Jews that wanted to get out of Germany like Albert Einstein and Princeton wanted to attract them, but they were antisemitic enough that they didn’t want to actually have them at Princeton so they created the Institute for Advanced Studies. So it’s very prestigious institute now, but I’ve been told that the reason it exists is due to antisemitism.

So going back to the story, pre-Institute of Advanced Studies, Godel came to visit at Princeton and he said this to Church and Church said, “Fine. You come up with your own definition.” Godel gave a series of lectures. It was actually printed as a paper written by Church’s student, Kleene, who took notes at the lectures and attributed it to Godel and they then sat down and they proved the two were equivalent. And when Church went back to Godel and said, “Look, your definition is actually the same as my definition,” Godel said, “Oh, is it? I must be wrong then.”

And the impasse was resolved by this young undergraduate student, Turing, who came up with his own definition, Turing machines, and unlike Church and unlike Godel, Turing wrote a whole section of the paper explaining why what a turning machine can do should be the same as what a computer that is a person following a set of rules should be able to do. And so the notion that anything that a computer can do can be done by lambda calculus or by recursive functions or by a Turing machine is often referred to as Church’s thesis, because it was just plunked out there, here, this is what they can do, but Turing actually gave evidence.

And there are people who claim, well, it shouldn’t be called Church’s thesis, it should be called Turing’s theorem, because some people have classified the sort of argument that Turing gave is saying, well, let’s assume that what people can do are this, this and this, and under those assumptions, he then actually, as it were proved in mathematical serum showing, so what they can do is exactly what a Turing machine can do.

Gödel, Undecidability and the Halting Problem

Adam: And at some point this all bumps up against, like isn’t Godel’s undecidability and halting problem.

Philip: That’s right. It was Godel’s proof of undecidability that got this all going because before Godel proved undecidability, everybody thought that what you’d be able to do is build a computer that could resolve any question in logic. And Hilbert gave a famous speech where he ended by saying, “We will know. We must know.” I’m talking about this idea that logic could do anything. And implicit in this, the notion that you could have a computer program as it were, although again this is just before there were computers that would solve any logically well expressed problem for you. And ironically, the day before Hilbert gave that speech was when Godel, at the exact same conference, presented his proof of undecidability. And so some people think it’s ironically very appropriate that what appears on Hilbert’s tombstone is that phrase, “We will know. We must know, [crosstalk 00:13:47] must know. We will know.” Yes.

Adam: And somehow, as I understand it, like undecidability has something to do with kind of this riddle of like the barber who shaves.

Philip: There’s a barber who shaves everybody who doesn’t shave themselves. Who shaves the barber?

Adam: And so that statement kind of, if you think it through, it kind of bounces back and forth between, he shaves himself but he can’t shave himself.

Philip: Right, because if he shaves himself, well, he only shaves people who don’t shave themselves, so if he shaves himself, that can’t be right. Okay, so then he doesn’t shave himself, ah! But he shaves everybody who doesn’t shave themselves, so he must shave himself. Right. It’s very similar to that. The fancy name for the barber’s riddle is Russell’s paradox. So there’d been a lot of work on set theory.

People basically said, “Any well-defined property defines a set,” and Russell said, “Wait a minute.” So, in particular, this was in the work of Gottlob Frege, was one of the earlier formulators of logic, he did very important work. And also, sad to say, a noted anti-Semite. Frege was writing this huge work on logic and Russell was, again, a young student, I’m not sure if he was an undergraduate, but certainly a young student, and he discovered that what Frege was doing had a paradox in it.

And the paradox was this, if any well-defined property defines a set. Let’s consider the set X consisting of all elements, such all elements, little Y, such that little Y is not in big X. Okay, so is big X in big X or not, and because a set might contain itself. And the answer is, well it’s in itself if and only if it’s not in itself, just like the barber’s problem. And this completely undid all of Frege’s work.

And there’s a source book called From Frege to Godel, a source book in mathematics by a man named van Heijenoort. And in that, he reprints Russell’s letter to Frege, along with a commentary by Russell about this and I’ll actually look it up and read it to you because it’s beautiful. Let me read to you what Russell actually said about this. So Russell’s letter to Frege is just a page long, explaining all this. Russel wrote a very nice paper.

So this was actually the beginning of what we call type theory, the way that Russell’s solved the paradox was by introducing what we now call types. In fact, what Russell called types so that you, it didn’t make sense to talk about a set billowing into itself and ruled out some other things as well. So this was actually very important, but Russell’s paper on type theory is beautiful because it begins with 10 pages of different stories all corresponding to what we were just talking about. So one is the barber’s paradox. One is, there’s a special name for a kind of word that whether the word refers to itself or not. And then he came up with a special name for word that doesn’t refer to itself that has the same paradox and so on. So it goes on for like 10 pages with variants of this paradox and it’s very readable, right?

We never have technical papers these days that are as readable and entertaining as Russell’s introduction to type theory and we really need more of that. So anyhow, here’s what Russell said to van Heijenoortt, “Dear Professor van Heijenoort, I should be most pleased if you would publish the correspondence between Frege and myself, and I’m grateful you for suggesting this. As I think about acts of integrity and grace, I realize that there is nothing in my knowledge to compare with Frege’s dedication to truth. His entire life’s work was on the verge of completion. Much of his work had been ignored to the benefit of men infinitely less capable. His second volume was about to be published, and upon finding that his fundamental assumption was an error, he responded with intellectual pleasure, clearly submerging any feelings of personal disappointment. It was almost superhuman and a telling indication of that, of which men are capable if their dedication is to creative work and knowledge instead of cruder efforts to dominate and be known. Yours sincerely, Bertrand Russell.”

Adam: Wow, so he took it well.

Philip: That’s a beautiful story.

Types Existed Before Computers

Adam: So an interesting or a confusing thing, I guess to me, I guess, would be, so we’re in the 1920s, 1930s besides misogyny and antisemitism, these men of the time come up with like type theory, but types when I first learned to see like types were like a representation of the width of bits that I’m storing somewhere, but we don’t even have computers at this point, but yet types exist.

Philip: Yes, and interesting, so, I will address that in a moment, but let me go back for a moment because I’ve gone off on all these tangents, let me tell you the rest of the story about Godel. So, as you said, Godel came up with something very much like those paradoxes, but it was slightly different. So what he did, was he said, “Consider this statement,” the statement, “This statement is not provable.” Okay, so if it’s true, then it’s not provable and you have something true that’s not provable, that’s unfortunate.

Okay, let’s say it’s false. So if false, then it is provable and you’ve proved something that’s false. It really undermines the definition of proof, right? And even the Nazis, right? People like Gentzen who was a Nazi, but was what developed really interesting bits of logic. The idea that you could prove something that was false, that they really were against that. I’m not sure people in the Trump administration would be against that, but certainly in the early days of Germany they were very much against that. So you couldn’t have that, right? That would just undermine the definition of proof. So it had to be the other possibility, but that meant that there was a true statement that was not provable. Of course the trick was, how do you show that you can write a statement that says this statement is not provable.

And what Godel had done was he showed how to encode logic as numbers. So he came up with this thing called Godel numbering, where basically you use the prime factorization of a number as a way of representing a string of symbols. So he could take any string of symbols and encode it as a number, and so statements like this is a well-formed statement of logic became a statement about a number, or this number actually represents the proof of a statement of logic represented by this other number became a statement of logic, sorry, it became a statement about numbers. And then he showed that indeed there was a number that corresponded to the statement, “This statement is not provable.”

Adam: It’s kind of a trick.

Philip: Yeah, but it was because, so that was a statement about numbers, right? The statement, “This statement is not provable,” had a particular number. I don’t know that anybody’s actually computed the number, that would be a fun thing to do, oh, I should set that as an assignment for a student to compute the actual number that means that is the number of the statement, “This statement is not provable.” But there is such a number, a finite number, and that’s what gets you into trouble. So that’s how they showed that things were undecidable, so there were some things that you can prove. At that point, people went from thinking, oh, hmm, maybe there’s not a computer program that can solve every possible question of logic.

And in fact, again, the example they came up with is what we now call the halting theorem. And we normally think of it as Turing’s halting theorem, but it was actually earlier proved the exact same result by Church. And again, you can show there’s a question that no computer program can answer. And the question is, given an arbitrary computer program, does it halt or not? And the reason why, again, is if you had such a thing, you could very trivially computer program that takes itself as input, that takes its own text as input, and therefore corresponds to this program halts if an only if it doesn’t halt, the same paradox again. So to prevent you formulating that paradox, it has to be the case that there is no general solution to the halting problem.

Adam: And these all involve sort of a self reference, or at least the halting problem involves like a program looking at a program, the Godel system involves encoding rules within, like there’s a self-reference theme.

Philip: Yes. Yes, self-reference is definitely part, and self-reference is very important in computing. Anytime you’re using recursion, you’re defining a function in terms of itself, that’s an example of self-reference. And in fact, if you look at the particular lambda term that encodes this idea of recursion, it’s something called the fixed point combinator or the Y-combinator, which happens to be the name the early logicians gave to it. It’s the same thing written twice next to itself, which is what allows it to do this kind of self-reference. And actually looking at that structure, I thought that looks a lot like the structure of DNA and the way in which DNA allows it can be replicated. Indeed, if you look at the letter Y, right? It looks like a strand of DNA unzipping.

Adam: That’s true.

Philip: I think there’s some interesting work to be done showing that anytime you have self-reference, it actually involves two copies of the same thing. But I don’t know a fruitful way into proving that, I don’t know anybody that’s done that, but I think there might be something interesting to be done there.

Adam: So we go from, to bring it back, if we go from lambda calculus and now we have types introduced, like what does that give us?

Philip: Right. So you’ve got all these paradoxes, oh, by the way, it’s because there’s this sudden rush after Godel proved this, that you get three people giving definitions of computability at that time, because as soon as Godel proved his result, people thought, oh, you probably can’t decide everything with the program, but to give a proof of that, you need a definition of what a computer can do. So before that, people didn’t care about the definition of what a computer could do when they said, “There is a computer that can decide,” no, they conjectured there’d be a computer program that could decide whether any statement of logic had a proof or you could prove it’s negation. So they referred to that as it would be effectively decidable.

And if you had such a program, you could just look at it and go, “Yep, that’s a program,” would be sort of like Justice Stewart’s definition of pornography, “I know it when I see it.” But if you want to show, no, there’s no way of doing this with a computer program, then you have to know what computer programs can do, and then you need a formal definition of a computer program, and that’s why you had three different definitions of computability coming out at the same time, because there’s this rush to suddenly say, “Wait, to prove it’s undecidable we needed a definition of a computer, how do we define that?” So it’s not an accident that you had three at the same time.

Adam: Have you heard this term, the adjacent possible, before?

Adjacent Possible

Philip: No, I have not.

Adam: Okay, so it’s an interesting term from people who looked into like things that are co-invented, like the telephone, for instance, so invented in two places at once, right? But it required a proper understanding of like electricity and a couple other concepts, and once those concepts were laid down, then the telephone was like adjacently possible. Like everything to come up with that concept was already available, it was just one step. So then I think that’s a similar concept here.

Philip: Yes, that’s exactly right. So very often when you have the same thing invented several times in a row, sorry, several times in near succession. It’s exactly for that reason. And in fact, I remember reading a paper about that, which had a list of about 10 different things that were all independent inventions, very close to each other in time of these different things, the phone being one of them, and if I remember the story right, it turned out that as Alexander Graham Bell was walking down the steps of the patent office, having just patented the phone, somebody else was walking up the steps about to [inaudible 00:28:06] the phone.

Adam: Yeah, I think I’ve heard a similar story.

Philip: So yes, that happens a lot. And in fact the thing we’re about to talk about, propositions as types, seem similar and they, again, you end up with two people independently inventing the same thing, one doing it as part of computing and one doing it as part of logic, and doing it at about the same time. But in that case, I don’t think it’s about the adjacent possible because there’s not a single development that was enabling both of those things to happen at that time. And sometimes it was quite a while before people realized they had done the same thing.

Invention vs Discovery

Adam: Are you using a term from your paper? Are these concepts invented or discovered?

Philip: Right. So in the paper I say, well, the fact that two different people working independently come up with the same thing, suggests that the right thing to call this is discovered. That there’s something fundamental there that people are finding and other people prefer to say, “No, it’s just, this reflects the human mind,” and they both had human minds, they both invented the same thing. I’m not even sure if that’s a fruitful debate to have, if you just come… I like using the word discovered, but if you come away just thinking, there is something fundamental here that’s not arbitrary, and that arises, somehow is it part of the structure of the universe? I don’t know. But there’s something quite important here, then I’m happy. I don’t really care whether you use the word discovered or not. I find that the word discovered is a good way of conveying this.

Adam: Yeah, and you touch on a number of examples, not just this one, I think in your paper, like type inference and, sorry, I don’t have this in front of me, like the ML type system, I guess, has some roots in an earlier logician or?

Philip: Yes. So, but let’s go back for a moment before talking about that one and talk about the first one.

Adam: Sure. Okay.

Philip: So the very first thing, which was, is to note that if you look at the way that Gentzen formulated logic in a paper he wrote in 1935, and you look at Church’s lambda calculus, and in particular, the first type system that was attached to Church’s lambda calculus, which was done in the 1940s by Church, because he’d used lambda calculus service as a macro language for writing down statements and logic and then he found out because it supported recursion, you could write down the statement A, which is equivalent to A implies A, which it was already known due to Haskell Curry, had worked out something called curious paradox. It says, if you can ever write down such a statement, then you can prove A, and if you could write down such a statement for any statement of logic, you could prove any statement of logic, so you’d better not be able to write down these statements.

That was sort of okay, because they were, in effect, infinitely long. Maybe you want to rule out things that were infinitely long, but by just allowing arbitrary lambda expressions, you could actually write down a statement with this property, so his logic became inconsistent. So that’s why he introduced type theory for much of the same reason that Russell had introduced type theory types to sets before Church introduced types to lambda calculus for this purpose.

And you were saying before, “Oh, yeah, but people ended up using types for very different things meaning, like how many bits were in a word?” And one thing I’ve not been able to track down is the direct line between using type the way Russell and Church used it and using type the way Backus used it in Fortran, which I think was the first use of the kind you’re describing. But I suspect, that Backus in fact knew about the work of Russell and Church, at least indirectly. Turing, certainly knew about those and Turing started using the word type for these things, so Turing is probably the connection, but I was never able to, John Backus unfortunately died before I got around to try thinking, oh, I should ask him if he got this from Turing, so I don’t know if he did, but I suspect he did. And that’s why we use the same word for both of these things.

Adam: But also there, like as you’re saying, there’s some sort of a conceptual isomorphism or something.

Philip: So the way we use types now, certainly in functional languages, corresponds directly to the way that Russell and Church was using it. But the types that appeared in very early computer languages like Fortran, were much more restricted. As you said, there was things like, is this an integer or a floating point? How wide is this numerical representation? How many bits are in it? But I believe that that usage was inspired by looking at the usage of Russell and Church and Turing. So Turing probably made the leap, was the first person to make the leap from one of those to the other.

Adam: The thing that I like about your paper here, I guess, as you’re saying, the fact that typed lambda calculus came about several different ways and has strong roots in logic, I mean, it implies that the languages that follow the structure maybe are following a correct structure or, I don’t even know if you’d use the word correct, but.

Philip: Well, they have more justification than the design of say Fortran or many of the successor languages. So the fact that Gentzen came up with natural deduction in 1935, and Church came up with lambda calculus in 1932, and then simply typed lambda calculus in 1940. And it turns out that simply typed lambda calculus and a subset of Gentzen’s natural deduction, the subset corresponding to implication are identical, they are formally identical, but as far as I know that formal identity wasn’t written down until Howard wrote it down in the late 1960s in a paper that was circulated in Xerox form, and wasn’t published until 1980 in a [inaudible 00:35:48] devoted to Haskell Curry. So it was between 1940 and 1969, that’s close to 30 years before people noticed, wait a minute, these are exactly the same thing.

Logicians and the Fundamental Nature of the Universe

Adam: So there are concepts, maybe you won’t say this, but I’ll say it, there are concepts that are innate to the mathematical underpinning of the universe, and they seem to be invented or discovered because they’ve heard of this underpinning by multiple people and it seems to be always logicians and then eventually computer scientists, is that right?

Philip: Yes. I wouldn’t put it quite as bluntly as you put it, I’m not sure that putting it that bluntly is justified. And if you tend to overstate things, even going so far to just saying that things are discovered rather than invented, you’ll find lots of people willing to argue with you. And as I said, I’m not sure that those arguments are fruitful, but I think if we just want to say, there seems to be something fundamental and important going on here and something interesting and worthy of study, I will settle for that. And yes, this fundamental process is at the core of typed lambda calculus, which is at the core of every functional language. I don’t know anything that has that interesting property of being discovered independently twice that’s at the core of imperative languages or object oriented languages.

God’s Programming Language

Adam: So, you said that I shouldn’t say things so bluntly, but-

Philip: You can do whatever you want, [inaudible 00:37:38] why I don’t find it productive to state things that bluntly.

Adam: Yeah, I really had as a goal is this interview to get you to say typed lambda calculus is God’s programming language and I can put that as a quote.

Philip: I have said that in the past, I’ve said exactly that. And when I said that, somebody got up and walked out of the room because they didn’t believe God had any role in talking about science. And indeed I said it even though I don’t believe in God. I’m Jewish, I regularly attend [foreign language 00:38:13]. Tomorrow is Yom Kippur and I will go to that, but I do not believe in God at all. I’m not a Jewish agnostic, I am a Jewish atheist. As my colleague, Gordon Plotkin, puts it, well, he goes to the Orthodox [foreign language 00:38:32] I go to the liberal [foreign language 00:38:34] through form [foreign language 00:38:36], but, no, sorry, I go to liberal [foreign language 00:38:39]. But Gordon says, “Well, yes, I don’t believe in God but I know exactly which God it is I don’t believe in.”

But yes, saying this is God’s programming language, I think is one way of conveying what I feel like is going on there. For a while it was sort of the only way I could think to phrase it that conveyed it very well, but not unreasonably. Some people got upset with that and said, “No, God shouldn’t be coming in here,” and I can understand why you would say that sort of thing. But then the question is, well, how do you explain this? And I’ve never had and I’m still looking, I think, for the best way of phrasing it.

Adam: Yeah, I think that it makes sense to say that like, in our number system, like prime numbers are what they are like, you don’t invent a prime number, you just verify that it’s prime and maybe there’s some extension of this concept that applies.

Philip: Yes, and that same debate, right? Are prime numbers invented or discovered? You will find people debating that as well, so maybe I should just explain it by saying these languages are prime.

Adam: There you go.

Philip: And maybe that’s a good phrase to use.

Alien Computer Viruses

Adam: So in your paper you actually use aliens to explain this concept. And the movie, I think the Independence Day.

Philip: Oh, yeah, I sometimes used, right, if you look at the movie Independence Day, at one point, it’s actually a remake of Wells’ War of the World. At the end of War of the Worlds, the invading Martians are destroyed because they catch a human virus. And back then, when War of the World was written, people didn’t know very much about biology and how you prevented these things. So the notion that Martians would fall prey to them seemed completely reasonable. Now we would say, “Well, why weren’t they wearing biological shielding when they went to invade the earth?” We would understand this sort of thing.

In Independence Day, the invaders are destroyed by a computer virus that they are infected with by, I think it was Jeff Goldblum. And again, that movie was a while back, we we now know a little bit more about computers and people would say, “Well, why didn’t they have anti-virus software on their computers?”

But also if you freeze frame the movie and look at the bit where he’s downloading the virus, you can see that it’s written in C, it has lots of angle brackets. And in fact the screenshot that I have is a dialect of C that only has open angle brackets, they never got closed. But at the time I saw that movie, I had just moved to Bell Labs and Brian Kernighan again, and Dennis Ritchie were my bosses, and were in offices just down the hall from me, so I thought this is cool, something they’ve done has made it into a very popular movie. So I thought that would be neat. I still don’t know any popular movies that have Haskell in them.

Adam: But the concept-

Philip: But the notion, right, that the alien computer was running C that really doesn’t seem very likely.

Adam: So it should be running something derived from typed lambda calculus.

Philip: Not really. So I don’t think you could give an alien computer a virus by giving it lambda calculus any more than you could give it one by giving it C. But what I do think is let’s say that we tried to communicate with aliens, we’ve done that, right? The Voyager, I think it was, had a plaque on it, trying to communicate with aliens. And you might try to communicate by sending a computer program and maybe we could send a program and see if aliens would be able to decipher it, that might work, or it might not, maybe they would just find that too hard. I think if we sent them a program written in lambda calculus, they would be able to decipher it, that they would probably have gotten to the point where they had discovered lambda calculus as well, because it is so fundamental and that they’d have a much easier time deciphering that then deciphering something written in C.

And in fact, I go on, when I’m explaining this, to say even if you were in a different universe that say had a different gravitational constant or something like that, right? And scientists actually talk about, they point out that the different constants of our universe are finely tuned. If they were a little bit different, matter wouldn’t have accumulated, you wouldn’t have life, there’d be nobody here to observe the universe. Oh, maybe that’s why they’re so finely tuned because we’re here to look at the universe and wonder about it. So they think about, well maybe there are other universities where there are different. I can’t conceive. Maybe it’s just the limitation of my own abilities that’s quite possible, but I can’t conceive of any kind of universe where you don’t have the fundamental laws of logic and therefore where you don’t have lambda calculus, which is isomorphic to the fundamental laws of logic.

And so, yeah, so the way I put this is by saying you can’t call lambda calculus a universal programming language because calling it a universal programming language is too limiting, right? It works in all universes not just in one universe. And I tried to come up with a word for it and I couldn’t eventually, somebody suggested the word to me, omniversal.

Adam: Omniversal. So in the great multiverse, if I were to slip through to some other permutation, then perhaps PHP would not exist, but some functional programming would, that’s kind of the concept?

Omniversal Programming Languages

Philip: Yeah, I suppose the idea would be no matter where you went, you wouldn’t necessarily find PHP, but you would find some descendant of lambda calculus as a programming language. Ooh, that actually sounds like an interesting science-fiction story. Of course what actually happens in any of these stories about parallel worlds, if you find somebody identical to you, they look exactly like you and they’ve had exactly the same life as you, except for one small difference, like there’d be someone exactly like me, but who wasn’t born Jewish or something like that.

Adam: Yeah, like I’ve had a very small version of this, not to do with the multiverse at all, just in the one I was learning in high school, there was a lot of concepts for me to learn. And at some points I got frustrated with them, and then when I was learning Scala, all of a sudden all these concepts were here that I thought were just like weird Haskellisms, they were actually maybe more innate to the way the programs were structured.

Philip: Ah, yeah, that’s a nice version of it, isn’t it? So it means when you go from one programming language to another, you find friends.

Adam: Yeah, the things that a programming language exposes that are kind of part of this innate area, you should find them in other languages that have a similar backdrop or, I don’t know, similar theoretical basis.

Philip: Yes. Now, right, so that’s a nice phenomenon. I expect that probably happens with languages that aren’t functional languages as well, but you raise an interesting question, does it maybe happen more often with functional languages? It might or might not. I don’t know. But if it did, that would give some more evidence that we were onto something fundamental. I have no idea whether that’s true. I don’t even have an intuition that that’s necessarily true. It’s an interesting question.

Adam: It’s super hard to say anything in depth because people who created these languages are often up on all kinds of programming languages and concepts tend to move around and-

Philip: Right, what’s certainly true is if you look at object oriented languages, there’s a huge variety of different object oriented languages. They all have different formal basis. Whereas pretty much every functional language will have lambda calculus as its formal basis, and they will all be organized around very similar principles, whereas for object oriented there’s quite a few different principles really.

Generics Are Innate

Adam: Yeah, and I feel like there’s some principles that are maybe, like some principals have trade-offs but some principals, once you see them in a language, then you don’t want to use language that doesn’t have them. So like generics, for instance, I think come from some of the FP world of like Paramount Tri-City, but now is a pretty mainstream concept, and if you’re familiar with generics and you switched to a language that doesn’t support them, you’re like, “Like this is horrible.”

Philip: Yeah, that’s a nice example, and that definitely came out of the functional programming community and indeed of the basis for it, something called polymorphic lambda calculus, or called System F, it’s one of these things that was discovered twice independently. So the computer scientist, John Reynolds came up with polymorphic lambda calculus as way of getting at exactly what we now call generics. And at exactly the same time, the logician Jean-Yves Girard came up with system F because he was trying to capture some properties of second order logic. And they turned out to be exactly the same thing and it was quite a few years before Girard and Reynolds became aware of each other’s work. And Reynolds even told me that he went to talk at Girard’s university and nobody told him about Girard’s work when he was talking about polymorphic lambda calculus.

Adam: I think, like it seems like sometimes you have to squint to see the, like they’re not direct, it’s not immediately clear that these things correspond, I’m guessing.

Philip: No, actually System F, so you do, I think you do need to squint, right? You need 30 years worth of squinting to see that Gentzen’s natural deduction and Church’s simply typed lambda calculus are the same thing. But, no, as it happens, the correspondence between what Girard did and what Reynolds did was very close, they were both formulated as variants of lambda calculus with abstraction over types, which is what generics are about, like being able to write a bit of code that works at any type.

Adam: So I learned a lot from this paper and the talk is great. In the talk, you have some costume changings, it’s quite exciting.

Philip: Yeah, I’m not going to do that over the radio.

Adam: I assume that you’re wearing some sort of lambda t-shirt right now, we can’t see you, but let’s pretend.

Philip: You can imagine that.

Logicians First

Adam: So I guess for closing thoughts as we wind down, like why is it that these ideas don’t seem to come from computer science first, they seem to be coming from different areas?

Philip: Yeah, the logicians usually get there before the computer scientist. So if you look at these pairings, like the type system that was independently discovered by Hindley and Milner, Hindley was several years before Milner. System F came a couple of years before Reynolds’ system. Many computer languages now are based on the next things Girard did, which was called linear logic. And that was sort of interesting, but then they sort of caught up with each other, and in fact, linear logic was published in a computing journal, not in a mass journal. And it was quite interesting when it was published. It was a whole issue of the journal, more than a hundred pages, and the editor of the journal had to preface it by saying, “We’ve not been able to get anybody to referee this, but it’s so important that we’re going to publish it unrefereed.”

And that was a good decision. That was an extremely influential, and still is an extremely influential piece of work. I have a almost $4 million project funded by the UK government than jointly with Imperial and Glasgow. And that’s essentially developing ideas that come from linear logic to try to apply them to communication protocols. So linear logic has proved to be extremely influential and I think will continue to be for a long time. In The Rust Programming Language, many of the ideas in that are best understood in terms of linear logic. They don’t use linear logic exactly, but it’s coming from the same roots.

Adam: I find logic are something affine.

Philip: They’d be more closer to affine than to linear logic, but again, they’re not affine logic exactly either. The difference between linear and affine is just, in linear logic you must use each fact exactly once, you can’t use it twice, you can’t forget about it, you can’t not use it at all. There’s some older things like relevant logic where you can use things twice, but you can’t forget about them. In others, everything is relevant, so you can’t forget about facts. Affine logic is the other way around, you can forget things, but you’re not allowed to use them twice.

Adam: Which is important because that’s a constraint you don’t really want to have to deal with as a program, like as a developer you don’t want to have to make sure you always use things, I guess.

Philip: That’s why you might want to be linear rather than affine, that’s right. And the reason that you might want to be, say affine rather than relevant, so only use things once, is this corresponds to a limited resource. And in particular it would correspond to not being able to alias something. If you can only use it once you can’t take an alias of it. And that means that when you say sending a message along a channel, you’ve got the only copy of the channel, so you can update it to indicate, okay, I sent this message now, now I’m expecting the next thing in the communication protocol, which is why linear logic is important for doing communication protocols and main method of doing this is something called session types due to Kohei Honda.

When Kohei Honda invented session types for doing protocols, he was inspired by linear logic. And in fact, there are sort of four main things in session types. There’s send a message, receive a message. And the idea is that these are dual. If you’re sending a message at one end of a channel, you must be receiving at the other end of a channel. And then there’s, make a choice or accept a choice. So at one end of the channel, you say, right, I’ve got two alternatives here, I pick alternative A. At the other end of the channel, you’re saying I’m offering two alternatives here called A and B. And again, you want those to match up. So that, make a choice and offer a choice, are actually two connectives of linear logic and Kohei did this in the late 90s. Linear logic had come along, or the mid-90s, linear logic had come along in the late 80s, 1987, and it wasn’t until 2010 that Frank Pfenning and Luıs Caires worked out that actually send and receive could be viewed as the other two connectives of linear logic.

And that then formed the basis for a lot of my research work. That’s why I got involved with a Nobuko Yoshida at Imperial and Simon Gay at Glasgow who were carrying on Caires’ work. And that’s where our big government funded project comes upon what are called session types comes from. So that’s good, I’ve gotten a reference to my day job.

Bringing Monads To Haskell

Adam: Well, I have another reference to your day job maybe, which is, so modal logic and monads, weren’t you at some point involved in bringing monads to Haskell?

Philip: Yes, so monads came from some work done by Eugenio Moggi, who’s currently in Italy, but at the time was a postdoc, I think, here at the University of Edinburgh, working with Gordon Plotkin and he’d worked out that monads were very useful for structuring the denotational semantics of programming languages. And I looked at that and I went, “Oh, they’re just as useful for actually structuring functional programs.” So I was one of the first people to suggest using monads as a way of structuring functional programming. Andy Pitts and Andy Gordon at Cambridge were suggesting very similar things at the same time.

Adam: And it seems like, if it’s based on modal logic, like the guy who invented modal logic was long dead at this juncture.

Philip: Well, it wasn’t based on modal logic. It was just done independently, it came out of ideas from category theory. So monads are a structure that appears in category theory for dealing with things in algebraic topology which I don’t know, so I don’t really understand the connection, but monads are very simple structure. It’s actually very easy to apply instruction in functional programs. And then it turned out that, right? Remember what I said that any interesting idea in computing there’ll be a corresponding interesting idea in logic and vice versa, and it turned out that there was a variant of modal logic that exactly corresponded to monads. And this was discovered by Valeria de Paiva and Gavin Behrman, and I can’t remember the other authors of that paper, but I think there were about four authors that had worked out this exact correspondence. And then later than that, Bob Harper told me, “Well, actually there are lots of different modal logics.” There were I think some given standard names, there was S1 through S8, and Bob said, “Each one of these corresponds to an interesting thing to do in computing.”

Adam: So there’s a rich mine of ideas for programming language concepts if you just look in logic and squint, given 30 years?

Philip: Yes. Waiting 30 years can be important.

The Secret to Good Research

Adam: All right Phillip, so, or Phil, I guess, we’ve gone over, is there any closing thoughts? Would you like to pitch your work or anything you’d like to finish with?

Philip: Well, since we’ve mentioned 30 years, what I will mention is that when we first did Haskell, we never thought that it would become widely used and now it has. And I think this is the secret to having an impact in research, do something good, stick with it, and just stick with it for 30 years. And if you keep teaching it to your students, some of them will go out and do amazing things with it. So I think it’s really important. There’s a lot of work where the government wants people to focus on whatever they think is important right now, like AI and machine learning or something like that, but I do think there’s a lot to be said for finding something fundamental, sticking with it, and it turns out if you wait 30 years or maybe sometimes a hundred years, you’ll find out that that was well worth doing.

Adam: And it gives you a great legacy as well.

Philip: I’m going to stick with Russell here, and so our dedication should be to truth rather than to efforts to be widely known.

Adam: Nice. Thank you so much for joining me, Phil. It’s been very fun and interesting.

Philip: Okay, well, thanks very much. I enjoyed that.

Adam: All right, there we go. That was the interview thanks to so many people who retweeted the previous episode on Twitter or who mentioned it on Reddit or elsewhere. If you liked the show, tell your coworkers when you are hanging around the proverbial water cooler, or if you work remotely like myself, leave a review somewhere. If you have any guests suggestions or feedback, shoot me an email.

Support CoRecursive

Hello,

I make CoRecursive because I love it when someone shares the details behind some project, some bug, or some incident with me.

No other podcast was telling stories quite like I wanted to hear.

Right now this is all done by just me and I love doing it, but it's also exhausting.

Recommending the show to others and contributing to this patreon are the biggest things you can do to help out.

Whatever you can do to help, I truly appreciate it!

Thanks! Adam Gordon Bell